Home Assistant has declared 2023 “the year of voice”, setting out a bold vision: let users interact with Home Assistant and their appliances in their own natural language. The initiative aims to move smart home management beyond operating terminal devices or issuing simple voice commands. The main challenge lies in designing a system that can figure out the user’s intent from spoken requests and so achieve real smart home automation.

Use Home Assistant to build a smart home; use an LLM to make it smarter

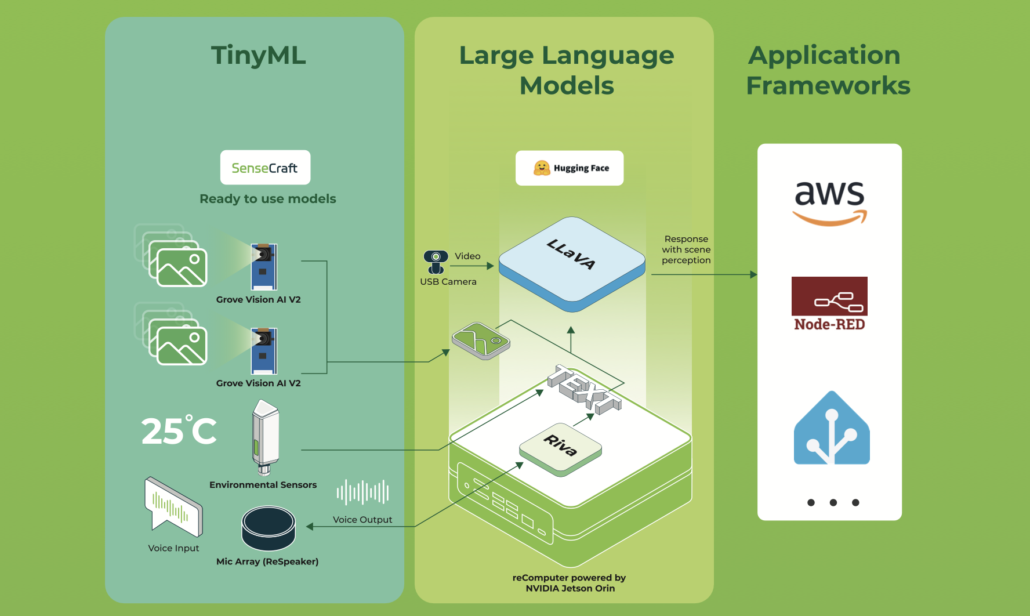

Following the vision of “controlling the smart home in our own language”, we built a system we call Local Jarvis, which integrates tinyML and an LLM (large language model) into Home Assistant as a voice control option. Local Jarvis is an answer to the growing desire for smarter, more private smart home management.

With the Home Assistant MQTT integration, Home Assistant can connect to AWS IoT Core and to a local Greengrass MQTT broker. This lets it exchange messages with the cloud, with other local Greengrass components, and with devices on the same network. As a result, Home Assistant can use Greengrass components provided by AWS, custom components, and components made by the community, along with AWS services, to extend what home automation can do.
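To make the MQTT path more concrete, here is a minimal sketch of what a command message for a light might look like. The topic layout `home/<room>/light/set` and the helper name `light_command` are assumptions made for illustration; in practice the command topic is whatever you configure for the device in Home Assistant. The payload shape follows the JSON style commonly used for MQTT lights (`state`, optional `brightness`). The sketch only builds the message, so it runs without a broker.

```python
import json
from typing import Optional, Tuple


def light_command(room: str, on: bool, brightness: Optional[int] = None) -> Tuple[str, str]:
    """Build an MQTT (topic, payload) pair for a light command.

    The topic scheme is hypothetical; match it to your own configuration.
    """
    topic = f"home/{room}/light/set"
    payload = {"state": "ON" if on else "OFF"}
    if brightness is not None:
        if not 0 <= brightness <= 255:
            raise ValueError("brightness must be between 0 and 255")
        payload["brightness"] = brightness
    return topic, json.dumps(payload)


topic, payload = light_command("kitchen", on=True, brightness=128)
print(topic)
print(payload)
```

An MQTT client connected to the local broker would then publish `payload` to `topic`; which client library to use is left open here.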

Combining Home Assistant with locally run LLMs allows for sophisticated natural language processing without relying on the cloud. This approach keeps voice data private and reduces latency in command processing. Advanced language models also let the system learn from user interactions, making smart home automation not just responsive but anticipatory.

The architecture that combines tinyML and an LLM is designed with simplicity and scalability in mind. From voice capture through ReSpeaker, to processing with NVIDIA’s Riva and Jetson AGX Orin, to executing commands via Home Assistant Green Gateway, each component plays a critical role in transforming voice input into intelligent actions. This streamlined workflow not only ensures privacy and efficiency but also opens up new possibilities for developers to customize and extend their smart home solutions. The workflow has four steps:

1. Capturing voice via ReSpeaker, then converting speech to text using Riva.

2. Utilizing the NVIDIA Jetson AGX Orin to run LLAMA2, analyzing the text to understand user commands and generate appropriate responses.

Check out our GitHub repo for the Local Voice Chatbot project.

3. Executing commands: Through the Home Assistant Green Gateway, translate the model’s responses into actions, controlling smart devices accordingly.

4. Using the Home Assistant interface for real-time feedback and control, enhancing user interaction with the smart environment.

Check out our GitHub repo for the Local Jarvis project.
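The workflow above can be sketched as a small pipeline with one pluggable function per stage. This is an illustrative outline, not the project's actual code: the names `Action`, `run_pipeline`, `fake_speech_to_text`, and `fake_interpret` are hypothetical, and the stand-in stages only mimic what Riva (speech to text) and the LLM (intent understanding) would do on real hardware.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Action:
    """A device action to hand to Home Assistant."""
    domain: str      # e.g. "light"
    service: str     # e.g. "turn_off"
    entity_id: str   # e.g. "light.living_room"


def run_pipeline(
    audio: bytes,
    speech_to_text: Callable[[bytes], str],
    interpret: Callable[[str], Action],
    execute: Callable[[Action], None],
) -> Action:
    """Run one voice request through the capture -> text -> intent -> action stages."""
    text = speech_to_text(audio)   # stage 1: ASR (Riva in the article)
    action = interpret(text)       # stage 2: language model decides what to do
    execute(action)                # stage 3: Home Assistant carries it out
    return action                  # stage 4: caller can show feedback in the UI


# Stand-ins so the sketch runs without a microphone, Riva, or an LLM.
def fake_speech_to_text(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_interpret(text: str) -> Action:
    service = "turn_off" if "off" in text.lower() else "turn_on"
    return Action("light", service, "light.living_room")


executed: List[Action] = []
result = run_pipeline(
    b"turn off the lights", fake_speech_to_text, fake_interpret, executed.append
)
print(result)
```

Keeping each stage behind a plain function makes it straightforward to swap a stand-in for a real speech or language model later without touching the rest of the flow.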

At its core, “Local Jarvis” is a response to the growing demand for more intuitive and secure home automation solutions. By leveraging the power of local LLMs, this project sidesteps the privacy concerns associated with cloud-based processing, offering a secure environment where user data never leaves the confines of home. The integration with Home Assistant amplifies this effect, providing a seamless interface for voice commands to control smart devices, making the interaction not just smarter but inherently more natural.

Let large language models figure out what you’re trying to tell your smart home.

You can ask “Nabu,” “Jarvis,” “Mirror,” or any name you choose to turn off lights, adjust the thermostat, or execute automations. Beyond single commands, you can give Jarvis tasks such as, “when the postman arrives, leave a message and confirm package receipt.” Moreover, as the smart hub learns from your behavior over time, it will adapt to your lifestyle automatically, becoming increasingly intelligent.

A truly smart home is capable of predicting your requirements and executing tasks smoothly without any need for manual input. The essence of creating such a smart environment lies in automation. Without automation, a smart home simply becomes a set of devices that can be controlled from a distance. Utilizing LLMs, “Local Jarvis” can parse complex commands and engage in context-aware interactions, offering a level of personalization previously unseen in home automation. This capability to adapt and learn from user habits and preferences signifies a shift from reactive to proactive smart homes, where systems not only respond to commands but anticipate needs.
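One way to turn a parsed command into a device action is to have the model answer in a small JSON format and translate that into a call to Home Assistant's REST API, which exposes services at `/api/services/<domain>/<service>` and expects a bearer token. The sketch below assumes such a JSON reply format (it is not taken from the project), uses a hypothetical allow-list `ALLOWED`, and uses placeholder values for the base URL and token. It only builds the request and does not send it.

```python
import json
import urllib.request

# Hypothetical allow-list: only these services may be triggered by the model.
ALLOWED = {("light", "turn_on"), ("light", "turn_off"), ("climate", "set_temperature")}


def parse_llm_reply(reply: str) -> dict:
    """Parse the model's JSON reply and reject anything outside the allow-list."""
    data = json.loads(reply)
    key = (data["domain"], data["service"])
    if key not in ALLOWED:
        raise ValueError(f"service not allowed: {key}")
    return data


def build_request(action: dict, base_url: str, token: str) -> urllib.request.Request:
    """Build (but do not send) a Home Assistant service call request."""
    url = f"{base_url}/api/services/{action['domain']}/{action['service']}"
    body = {"entity_id": action["entity_id"], **action.get("data", {})}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Example reply in the assumed format; URL and token are placeholders.
reply = '{"domain": "light", "service": "turn_off", "entity_id": "light.living_room"}'
request = build_request(
    parse_llm_reply(reply), "http://homeassistant.local:8123", "YOUR_LONG_LIVED_TOKEN"
)
print(request.get_method(), request.full_url)
```

Validating the reply against an allow-list before building the request is a deliberate choice here: model output is untrusted text, so the set of services it can reach is kept explicit.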

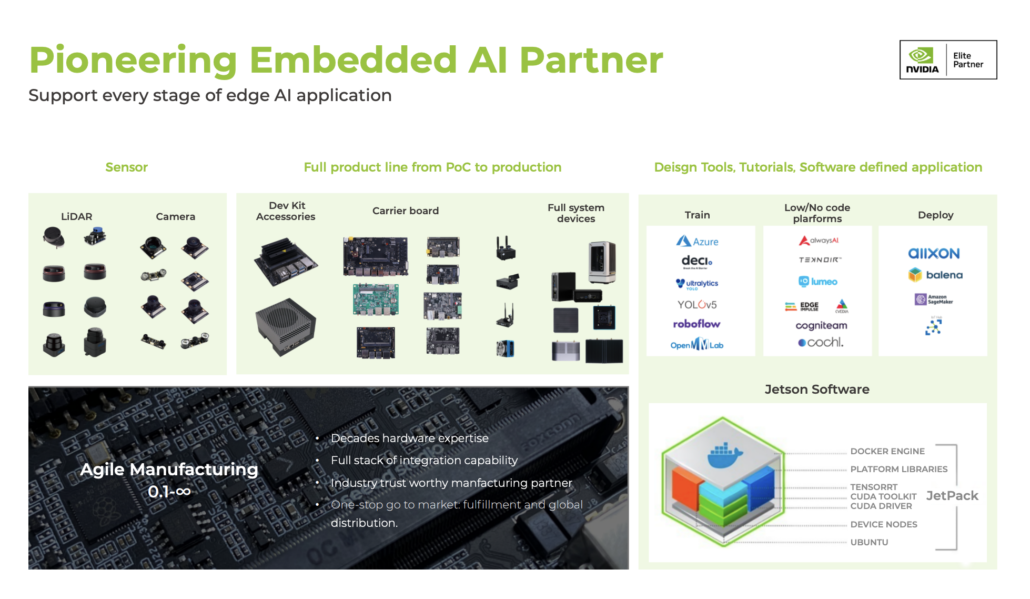

Seeed NVIDIA Jetson Ecosystem

Seeed is an Elite partner for edge AI in the NVIDIA Partner Network. Explore more carrier boards, full system devices, customization services, use cases, and developer tools on Seeed’s NVIDIA Jetson ecosystem page.

Join the forefront of AI innovation with us! Harness the power of cutting-edge hardware and technology to revolutionize the deployment of machine learning in the real world across industries. Be a part of our mission to provide developers and enterprises with the best ML solutions available. Check out our successful case study catalog to discover more edge AI possibilities!

Discover infinite computer vision application possibilities through our vision AI resource hub!

Take the first step and send us an email at edgeai@seeed.cc to become a part of this exciting journey!

Download our latest Jetson Catalog to find one option that suits you well. If you can’t find the off-the-shelf Jetson hardware solution for your needs, please check out our customization services, and submit a new product inquiry to us at odm@seeed.cc for evaluation.

The post Control Home Assistant with Your Voice: Integrate RIVA, Llama2 to a Smart Home Hub Powered by NVIDIA Jetson appeared first on Latest Open Tech From Seeed.