Video analytics plays a pivotal role in the AIoT ecosystem by transforming raw video data into actionable insights. Foundational techniques such as face detection and motion detection identify and interpret basic visual elements within video streams, which in turn improves decision-making, operational efficiency, and security across various sectors.

By integrating AI’s processing capabilities with IoT’s vast network of connected devices, video analytics enables real-time monitoring and analysis for applications ranging from urban planning and traffic management to retail analytics, healthcare, and industrial safety. The strength of video analytics in AIoT lies in its ability to combine AI’s analytical power with IoT’s data-gathering reach, paving the way for the next generation of intelligent monitoring and decision-making solutions.

Combining Generative AI: From Detection to Interpretation

Today, simply detecting events that can trigger an action is no longer enough. A growing number of ideas and applications use large language models (LLMs) to make video analytics systems more accurate and user-friendly.

How Generative AI Empowers Video Analytics

Generative AI introduces a new class of methods that help us understand the world in ways we couldn’t before. These models work well with natural language inputs and can help us better understand content, including images and videos. They are especially good at adapting to new tasks from zero-shot or few-shot examples, which changes how we interact with digital content and makes it more accessible and comprehensible.

LLMs play a pivotal role in this change. An LLM is a powerful AI model for natural language processing. Trained extensively on diverse textual data, it excels at comprehending and generating human-like language. You can use these models to:

- analyze and generate descriptions from video content

- facilitate intuitive search functionalities through voice or text queries

- improve the accessibility and management of video data by translating visual content into comprehensible language.

The integration of LLMs with video analytics platforms enriches user interactions and enhances the automation of content summarization, making video data more searchable, accessible, and actionable for diverse applications ranging from security to retail customer behavior analysis and beyond.
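To make the search idea concrete, here is a minimal sketch of text search over video captions. It assumes the captions have already been generated by a language model and stored per timestamp. The `captions` data, the function names, and the simple word-matching approach are all illustrative assumptions, not part of any Seeed or NVIDIA API.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lowercase a string and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(captions):
    """Map each word to the set of timestamps whose caption contains it.

    `captions` is a hypothetical dict of {timestamp_seconds: caption_text},
    standing in for descriptions a language model would generate.
    """
    index = defaultdict(set)
    for timestamp, caption in captions.items():
        for word in tokenize(caption):
            index[word].add(timestamp)
    return index


def search(index, query):
    """Return sorted timestamps whose captions contain every query word."""
    words = tokenize(query)
    if not words:
        return []
    matches = set.intersection(*(index.get(w, set()) for w in words))
    return sorted(matches)


# Made-up captions for illustration only.
captions = {
    0: "A delivery truck parks at the loading dock",
    30: "Two people walk past the loading dock",
    60: "A person in a red jacket enters the store",
}
index = build_index(captions)
print(search(index, "loading dock"))
```

A real system would more likely use embeddings or the LLM itself to match a query against captions. Exact word matching is used here only because it keeps the example self-contained.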

In-Depth Interpretation by Leveraging TinyML and LLMs Together

As we showcased recently at NVIDIA GTC 2024, we combined TinyML with large language model deployment to present a lightweight AI system capable of deep, context-aware analysis that extends from the edge to the cloud:

- TinyML comes to life through ultra-low power sensors, including cameras and devices that capture environmental and authority data. These sensors feed streamlined, processed data directly into our LLMs.

- Running on edge devices powered by Jetson Orin NX, the LLMs provide sophisticated, context-aware insights and real-time responses, enriching scene perception with additional layers of detail.

- AWS underpins this system, providing the necessary infrastructure for deployment, management, and scalability. In parallel, Node-RED provides a unified interface that ties together disparate components and services into a cohesive network of devices.
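The hand-off from TinyML sensors to an LLM can be sketched as follows. This is a rough illustration under stated assumptions: the `Detection` record, the 0.6 confidence threshold, and the prompt wording are all hypothetical, and the actual GTC demo may structure this step quite differently.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """A hypothetical event reported by a TinyML sensor."""
    sensor_id: str
    label: str
    confidence: float


def filter_detections(detections, min_confidence=0.6):
    """Keep only detections at or above the (assumed) confidence threshold."""
    return [d for d in detections if d.confidence >= min_confidence]


def build_prompt(detections):
    """Turn filtered detections into a compact text prompt for an LLM.

    Returns None when there is nothing worth sending, so the edge device
    can skip the more expensive LLM call entirely.
    """
    if not detections:
        return None
    lines = [
        f"- {d.sensor_id}: {d.label} ({d.confidence:.0%})" for d in detections
    ]
    return (
        "Sensor events:\n"
        + "\n".join(lines)
        + "\nDescribe what is happening and whether it needs attention."
    )


# Made-up sensor readings for illustration only.
events = [
    Detection("cam-1", "person", 0.91),
    Detection("cam-2", "cat", 0.35),
]
prompt = build_prompt(filter_detections(events))
print(prompt)
```

The point of the design is that the low-power stage sends a few lines of text rather than raw video, so the LLM on the Jetson only runs when something passes the filter.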

Get Hands-on with Jetson Orin to Experience TinyML & LLMs

TinyML

For simple vision AI detection tasks, TinyML running on embedded AI components can save a lot of resources. Check out SSCMA, our open-source project that gathers optimized AI algorithms designed to run effectively on low-end devices while maintaining impressive speed and accuracy. They cover a wide range of applications, from anomaly detection to pose estimation and even customized identification tailored to specific needs. SSCMA is available on GitHub, along with a comprehensive model zoo for direct access.

Live LLaVA

The Jetson Orin edge device takes these capabilities further by running inference on keyframes flagged for abnormalities or significant events, taken from images or live video streams. By leveraging Live LLaVA on the Jetson AGX Orin, developers can achieve a deeper understanding of various situations through a multimodal pipeline and Vision Language Models (VLMs). This setup enables a more nuanced and comprehensive analysis of video content, extracting pivotal information for advanced content search and interpretation.
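One simple way to pick keyframes before handing them to a VLM is frame differencing. The sketch below is only an assumption about how such a gate might work. It is not how Live LLaVA or SSCMA selects frames, and the threshold, the minimum gap, and the flat grayscale pixel lists are illustrative choices.

```python
def mean_abs_diff(frame_a, frame_b):
    """Average absolute pixel difference between two equally sized frames.

    Frames are flat lists of grayscale values (0-255) to keep the example
    dependency-free; a real pipeline would use image arrays.
    """
    return sum(abs(a - b) for a, b in zip(frame_a, frame_b)) / len(frame_a)


def select_keyframes(frames, threshold=10.0, min_gap=2):
    """Return indices of frames that differ strongly from the previous frame.

    `min_gap` enforces a minimum index distance between selected keyframes,
    so a burst of motion does not flood the downstream model with requests.
    """
    keyframes = []
    last_selected = None
    for i in range(1, len(frames)):
        change = mean_abs_diff(frames[i - 1], frames[i])
        far_enough = last_selected is None or i - last_selected >= min_gap
        if change > threshold and far_enough:
            keyframes.append(i)
            last_selected = i
    return keyframes


# Six tiny 4-pixel "frames": the scene changes at index 2 and again at index 4.
frames = [
    [0, 0, 0, 0],
    [0, 0, 0, 0],
    [100, 100, 0, 0],
    [100, 100, 0, 0],
    [100, 100, 100, 100],
    [100, 100, 100, 100],
]
print(select_keyframes(frames))
```

Only the selected frames would be forwarded for VLM inference, which keeps the heavier model idle while the scene is static.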

TinyML x local LLM – Build Your Smart Home Control Hub

Incorporating an LLM into this technology stack unlocks new possibilities for real-world applications. For instance, using an LLM, we can interpret and execute voice commands to control an indoor lighting system, demonstrating the practical utility of these advanced models in daily scenarios. This seamless integration of TinyML and LLMs with edge devices like Jetson Orin exemplifies cutting-edge AI technology, bridging the gap between digital intelligence and physical-world applications.
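The lighting example can be sketched as a validation step between the LLM and the hardware. The sketch assumes the LLM has been prompted to answer with a small JSON object such as `{"room": "kitchen", "action": "on"}`. The room names, the action set, and the `parse_light_command` helper are hypothetical and only show the idea of checking model output before acting on it.

```python
import json

# Hypothetical rooms and actions supported by the lighting system.
ALLOWED_ROOMS = {"living room", "bedroom", "kitchen"}
ALLOWED_ACTIONS = {"on", "off"}


def parse_light_command(llm_reply):
    """Validate an LLM reply and return a normalized command, or None.

    The reply is expected to be a JSON object with "room" and "action"
    keys. Anything malformed or outside the allowed sets is rejected so
    that free-form model output never reaches the lights directly.
    """
    try:
        data = json.loads(llm_reply)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict):
        return None
    room = str(data.get("room", "")).strip().lower()
    action = str(data.get("action", "")).strip().lower()
    if room not in ALLOWED_ROOMS or action not in ALLOWED_ACTIONS:
        return None
    return {"room": room, "action": action}


# A made-up model reply for illustration only.
command = parse_light_command('{"room": "Kitchen", "action": "ON"}')
print(command)
```

Rejecting unknown rooms and actions keeps the control path predictable even when the model misunderstands a voice command.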

Conclusion

The integration of AIoT with video analytics, leveraging large language models (LLMs) and TinyML, marks a significant leap in transforming video data into actionable insights. This integration enhances decision-making and security across various sectors, with technologies like those developed by Seeed Studio showcasing the future potential. As we move forward, these advancements, deployed at the edge on Jetson, promise to revolutionize how we interact with and manage video content, setting the stage for smarter, more efficient, and more private ecosystems.

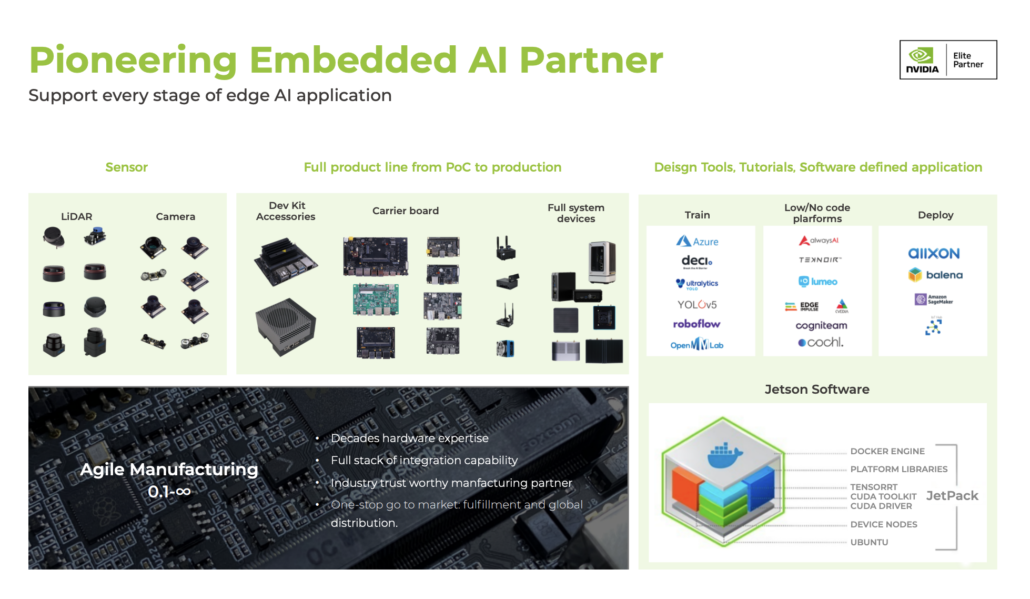

Seeed NVIDIA Jetson Ecosystem

Seeed is an Elite partner for edge AI in the NVIDIA Partner Network. Explore more carrier boards, full system devices, customization services, use cases, and developer tools on Seeed’s NVIDIA Jetson ecosystem page.

Join the forefront of AI innovation with us! Harness the power of cutting-edge hardware and technology to revolutionize the deployment of machine learning in the real world across industries. Be a part of our mission to provide developers and enterprises with the best ML solutions available. Check out our successful case study catalog to discover more edge AI possibilities!

Discover infinite computer vision application possibilities through our vision AI resource hub!

Take the first step and send us an email at edgeai@seeed.cc to become a part of this exciting journey!

Download our latest Jetson Catalog to find an option that suits your needs. If you can’t find an off-the-shelf Jetson hardware solution, please check out our customization services and submit a new product inquiry to us at odm@seeed.cc for evaluation.

The post The Future of Generative AI in Video Analytics Using NVIDIA Jetson Orin appeared first on Latest Open Tech From Seeed.